Table of Contents

- The Pulse of the Industry: Why the 2025 DORA Report Matters

- AI as an “Amplifier” and the “Trust Paradox”

- We’re Accelerating, but “Instability” Is Rising

- Our Additional Research: Speed vs. Stability in Turkish Teams

- The Formula for Success – “DORA AI Capabilities Model”

- How to Rapidly Integrate the 7 DORA Capabilities into Your Team

- Maximizing the Impact of AI Investments Through Platform Engineering and Value Stream Management

- From “Using AI” to Strategically “Managing AI”

Over the past 12 months, the world of software development has not merely innovated—it has undergone a revolution. AI, once seen as an “interesting plugin” or “helper tool” in our code editors, has now become a new operating system underpinning the entire development lifecycle. This transformation not only boosts individual productivity but also reshapes organizational decision-making, team dynamics, and the very way software creates value.

Just a few years ago, technology leaders were still asking: Should we use generative AI, or should we remain cautious due to security and accuracy risks? Are code assistants truly safe? By 2025, these questions have been replaced by a more complex and urgent matter: How can we generate tangible value from this transformation, and how can AI be positioned not just as a tool, but as a sustainable strategic asset?

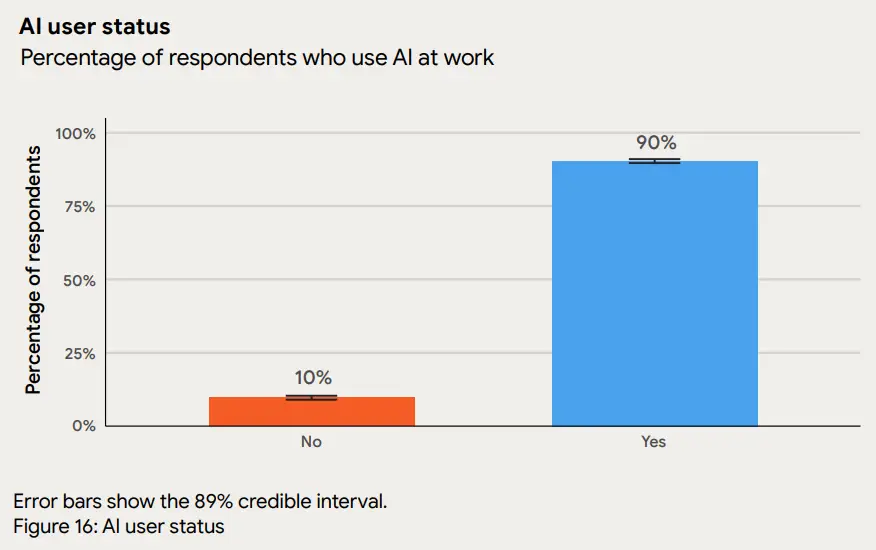

The 2025 DORA Report, published by Google Cloud and considered the industry’s gold standard, provides insight into these questions while clearly revealing the current state. The report shows that 90% of technology professionals are actively using AI in the workplace, indicating that adoption has moved beyond early adopters to mass-scale integration. The central question is no longer whether to use AI, but how to leverage it most efficiently and strategically to generate value.

However, universal adoption brings complex challenges. Teams integrating AI into their workflows may see apparent productivity gains, yet technical debt accumulates. Code is produced faster, but testing and delivery pipelines struggle to keep up. While individual productivity rises significantly, organizational performance does not always reflect these gains. DORA reports identify these discrepancies as “local optimization traps”—developers may be efficient in their own workflows, yet the organization as a whole may fail to capture the expected value. This underscores that using AI as a tool is easy, but managing it strategically is highly complex.

This article analyzes the findings of the DORA Report in depth, guiding readers beyond a user perspective to that of a system manager and strategy designer. Our goal is to help you manage the chaos created by rapid AI adoption, measure progress using the right metrics, and position AI not merely as an individual productivity booster but as an amplifier of sectoral leadership. Within this framework, we will explore the opportunities and risks AI presents from a holistic perspective and provide strategic recommendations for both leaders and developers.

The rise of AI as a “new operating system” in software development represents not just a technical shift but a cultural and organizational transformation. Team workflows, leadership decision-making, and the organization’s value-creation model must all be revisited. The 2025 DORA Report illustrates the speed and impact of this transformation with data, showing that when applied strategically, AI can evolve from a simple productivity tool into a system that strengthens the entire organization.

The Pulse of the Industry: Why the 2025 DORA Report Matters

In the technology world, the line between “opinion” and “data” is often blurred. Hundreds of trend reports are published every year, yet very few actually shape the direction of the industry. For over a decade, DORA has provided a clear signal amid this noise. It is more than a report; it is the gold standard through which organizations measure their own performance and understand what “good” truly looks like. In the past, DORA defined the classic “Four Key Metrics” of DevOps, uncovering the DNA of high-performing teams. Metrics such as lead time for changes, deployment frequency, mean time to recovery, and change failure rate have long served as industry benchmarks, enabling organizations to compare their strengths and weaknesses.

The 2025 report, titled “State of AI-assisted Software Development,” goes beyond simply capturing a snapshot of the current state. It offers a roadmap for leaders and engineering managers in the industry. This report is not a superficial analysis based on the opinions of a few hundred people; it is a comprehensive study built from data collected from approximately 5,000 technology professionals worldwide, supplemented by over 100 hours of qualitative interviews. As a result, the report provides a perspective on the impact of AI on software development grounded in real data, moving beyond hype and speculation. This rigor makes the report not just a statistical reference, but a trusted guide helping the industry navigate complex uncertainties.

The years 2024 and 2025 mark a period of rapid growth for generative AI. While this pace presents major opportunities for individual developers, it also introduces new uncertainties at the organizational level. Although roughly 90% of the industry has integrated AI into daily workflows, the translation of this usage into tangible organizational outcomes remains unclear. Many leaders observe that while their teams are more productive, the expected acceleration in software delivery or system stability is not always realized. Individual productivity surges do not automatically translate into organizational performance; these situations, termed “local optimization traps”, create a new strategic challenge for leaders.

The 2025 DORA Report provides a roadmap amidst this complexity. Designed to answer leaders’ most critical questions, it emphasizes that generating value from AI extends beyond mere tool acquisition; cultural and technical competencies are equally vital. To derive value from AI, organizations must align not only technology investments but also organizational structures, workflows, and leadership approaches. The report’s DORA AI Capabilities Model outlines the pillars for extracting value from AI and serves as a strategic document that should sit on every leader’s desk. This model provides a critical framework enabling organizations not only to boost productivity but also to convert AI into a sustainable competitive advantage.

In short, the 2025 DORA Report stands as the first reliable map of the AI-Enhanced Software Delivery era. Understanding and managing this new “operating system,” which the majority of the industry is moving toward, enables organizations to do more than gain individual productivity improvements—it strategically strengthens the entire organization. The report equips technology leaders and managers to navigate uncertainty confidently, using data-driven and effective approaches. It is now time to take a deep dive into the first and most striking findings highlighted by this map.

AI as an “Amplifier” and the “Trust Paradox”

The executive summary of the 2025 DORA Report emphasizes a single word: Amplifier. This term illustrates that AI is not a magic wand that fixes all shortcomings. Instead, it magnifies the characteristics of existing systems, making both strengths and weaknesses more visible. The report clearly defines AI’s fundamental role in software delivery: in high-performing organizations, it exponentially enhances existing strengths, while in weak or fragmented systems, it exposes and amplifies deficiencies. AI acts as an accelerator of organizational performance, but the outcome—positive or negative—depends entirely on the robustness of the underlying system.

This finding urges technology leaders to rethink their AI investments. The report makes it clear that ROI is determined not just by the tools used, but by the quality of the organizational system into which these tools are integrated. In organizations with solid workflows, cohesive teams, and high-quality internal platforms, AI can transform these strong foundations into extraordinary speed and quality. Conversely, in organizations with fragmented workflows, weak team coordination, and inconsistent tool chains, localized productivity gains from AI may dissipate into downstream chaos, negatively impacting overall system performance. In this context, AI does not just build a skyscraper; it reveals how quickly a weak foundation can crack.

The report identifies one of the most striking contradictions arising from this amplifier effect as the “Trust Paradox.” Around 90% of technology professionals have integrated AI tools into their daily workflows, yet trust in the outputs of these tools is not equally high. Only about one-quarter of respondents reported “high” or “very high” trust in AI-generated outputs, while roughly 30% expressed little or no trust. This indicates that professionals use AI extensively but question the accuracy of its outputs.

DORA does not interpret this as a failure. On the contrary, it is seen as a sign of mature adoption. Developers do not blindly rely on AI; they apply a “trust but verify” approach, critically reviewing outputs just as they would a snippet from Stack Overflow or a colleague’s pull request. AI outputs are treated as raw material, not finished products. This perspective positions AI not merely as a productivity booster but as an organizational amplifier that tests discipline and quality.

The takeaway for leaders is clear: low trust in AI is not a problem in itself. The solution is not to force teams to trust AI more, but to improve the system underlying AI: strengthen internal platforms, improve data quality, and solidify workflows. When the system is reliable, AI-generated outputs naturally become more trustworthy, enabling organizations to experience the positive effects of AI as a true amplifier.

We’re Accelerating, but “Instability” Is Rising

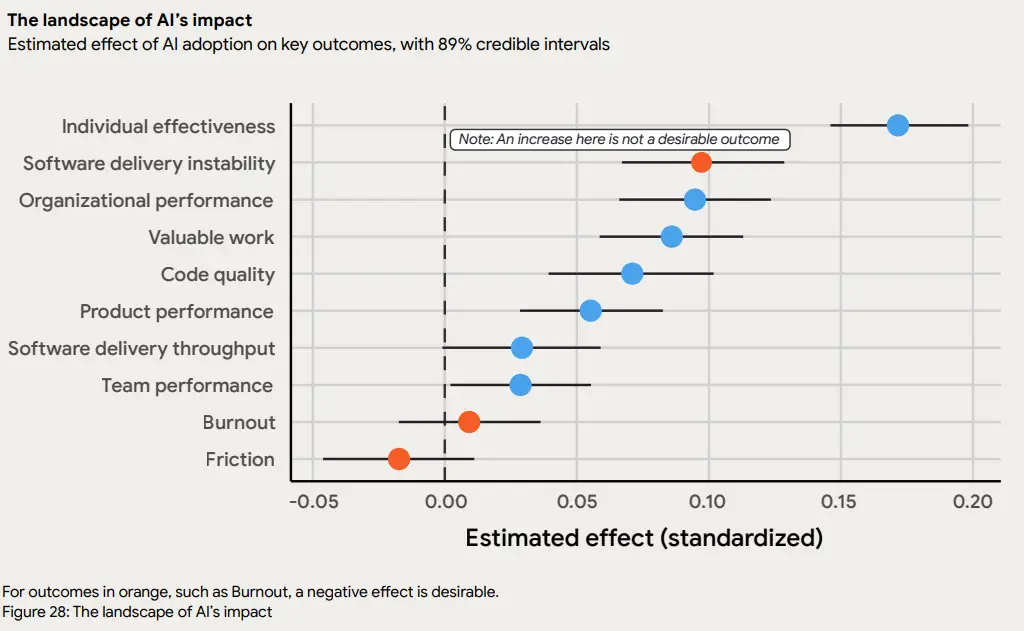

The 2025 DORA Report evaluates AI’s impact on software development not only in terms of speed and productivity but also through the lens of system stability and quality. Last year’s findings suggested that AI adoption could actually slow software delivery, creating a tension between promised efficiency and practical outcomes. The 2025 report provides a clearer perspective, showing that when used correctly, AI can drive measurable acceleration in delivery processes. However, this speed comes with new risks and fragilities.

The Reality of Acceleration: Throughput Is Increasing

The first key finding of the report highlights that AI adoption now produces measurable speed gains. Tasks such as code completion, test scenario creation, documentation, and code reviews can be completed much faster with AI tools. These gains are not limited to “perceived productivity” experienced by individual developers; they also reflect measurable improvements in software delivery throughput, one of DORA’s classic metrics.

This speed increase stems from effective integration of AI into team workflows. Routine and repetitive tasks that were previously manual are now automated, allowing teams to focus on creative and strategic work. In this sense, acceleration is not only an operational improvement but also a catalyst that enhances organizational value creation capacity.

The Cost of Speed: Rising Instability

Every gain has a cost, and speed is no exception. According to the report, instability in software delivery continues to increase.

Instability is measured through two key metrics:

- Change Fail Rate (CFR): The frequency at which changes deployed to production result in failures.

- Rework Rate: The proportion of unplanned deployments required to fix issues in production.
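
As a rough illustration of how these two metrics could be computed from deployment history, here is a minimal Python sketch. The `Deployment` record and its two boolean fields are assumptions made for this example; real data would come from your own CI/CD and incident tooling, and DORA's survey-based definitions may differ in detail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    """One production deployment (hypothetical record shape)."""
    deploy_id: str
    caused_failure: bool  # the change led to a failure in production
    unplanned_fix: bool   # the deployment itself was an unplanned fix


def change_fail_rate(deployments: list[Deployment]) -> float:
    """Share of deployments that resulted in a production failure."""
    if not deployments:
        return 0.0
    return sum(d.caused_failure for d in deployments) / len(deployments)


def rework_rate(deployments: list[Deployment]) -> float:
    """Share of deployments that were unplanned fixes for production issues."""
    if not deployments:
        return 0.0
    return sum(d.unplanned_fix for d in deployments) / len(deployments)


history = [
    Deployment("d1", caused_failure=False, unplanned_fix=False),
    Deployment("d2", caused_failure=True, unplanned_fix=False),
    Deployment("d3", caused_failure=False, unplanned_fix=True),  # hotfix for d2
    Deployment("d4", caused_failure=False, unplanned_fix=False),
]
print(f"CFR: {change_fail_rate(history):.0%}")
print(f"Rework rate: {rework_rate(history):.0%}")
```

Tracking both numbers over the same time window makes it easier to see whether a rise in deployment volume is being paid for with more failures and hotfixes.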

AI-driven acceleration negatively impacts these metrics. Code is produced faster, but more errors occur in production, requiring urgent fixes and unplanned interventions. In other words, speed improves productivity in the short term, but instability increases organizational risk and operational cost.

The Amplifier Effect and System Maturity

Behind this paradox lies the “amplifier” effect detailed in the previous section. AI’s accelerating power magnifies instability if existing systems cannot support the increased pace.

- Mature systems: In organizations with clear workflows, cohesive teams, and robust infrastructure, AI increases productivity while minimizing instability.

- Weak systems: In fragmented organizations with insufficient governance, AI amplifies errors and unplanned interventions alongside speed.

The report summarizes: “Teams adapt to speed faster than they can safely manage underlying systems.” Providing high-speed tools alone is insufficient; infrastructure, workflows, and governance must evolve in parallel.

Leadership Takeaways: Balancing Speed and Quality

The findings deliver a clear message for technology leaders: AI is no longer just a tool; it must be managed as a strategic accelerator. Without sustainable and safe acceleration, productivity gains remain short-term wins, increasing team fatigue, technical debt, and delivery unreliability over time.

Recommended actions for leaders include:

- Ensuring AI-accelerated workflows align with infrastructure and processes.

- Redesigning CI/CD pipelines, testing, and review mechanisms to handle increased velocity.

- Continuously monitoring process metrics, not just output speed, to track instability.

- Creating roadmaps that optimize both speed and quality, training teams in end-to-end processes, not only tool usage.

In summary, the 2025 DORA Report presents a clear picture: AI’s power can deliver extraordinary efficiency when deployed on robust systems, but without system maturity, speed comes with instability. Managing this balance has become one of the most critical responsibilities for today’s technology leaders and engineering managers.

Our Additional Research: Speed vs. Stability in Turkish Teams

Globally, the 2025 DORA Report identifies a paradox: AI increases throughput but also increases instability. While this holds true internationally, its effects are sharper and more pronounced in Turkey.

Turkey’s tech ecosystem—particularly in e-commerce, fintech, gaming, and “super-app” verticals—is built around a speed-focused culture. Rapid deployment of new features, competitor integrations, or campaigns often trumps long-term robustness and technical debt concerns.

Adding AI as an “amplifier” accentuates the pressure to deliver quickly, significantly increasing the instability risk foreseen by DORA.

Faster Technical Debt Accumulation

DORA highlights the importance of a “trust but verify” approach to AI outputs. In Turkey, teams under deadline pressure often skip this verification step. AI-generated code, which should be treated as raw material, is sometimes deployed without proper testing or architectural review, accelerating the accumulation of technical debt. This has a direct negative effect on DORA’s Rework Rate metric. While speed provides short-term productivity gains, long-term sustainability is at risk.

Verification Bottleneck Pressure

AI shifts the bottleneck from writing code to verifying it. In many Turkish companies, automated tests and thorough code review processes are still maturing. Sudden increases in AI-generated code volume can overwhelm these systems. Reviews are either superficial or skipped entirely, leading to sharper rises in Change Fail Rate than global averages.

This observation underscores that balancing speed and verification is critical in Turkey. Accelerating before systems mature triggers instability.

“Hero Developer” Model Supercharged

Turkey’s tech culture often relies on “hero developers” to deliver results. Success is measured by individual performance, not system resilience. AI enables these heroes to code faster, creating short-term “superhero” productivity, but this conflicts with DORA’s principles of sustainable systems and collective code ownership. High individual productivity may mask declining system stability. If these key developers leave or AI-generated code needs maintenance, the organization faces massive technical debt and unmanageable instability.

Leadership Implications: Speed, Systems and Sustainability

The “speed vs. stability” paradox in DORA is an urgent operational challenge in Turkey. A speed-driven culture combined with AI’s amplifier effect increases system fragility, turning short-term wins into long-term risks.

Leaders should:

- Rapidly align workflows and governance mechanisms with AI acceleration.

- Strengthen automated testing and code review processes to manage verification bottlenecks.

- Continuously monitor technical debt and instability metrics to anticipate risks.

- Reduce reliance on hero developers and build collective responsibility and sustainable systems.

The Formula for Success – “DORA AI Capabilities Model”

In previous sections, we examined the 2025 DORA Report’s most critical paradox: while AI increases productivity (throughput), it weakens system stability and amplifies fragility risk. This presents a serious threat to the long-term sustainability of software teams. So how can organizations resolve this dilemma? How can they build stable systems without sacrificing speed?

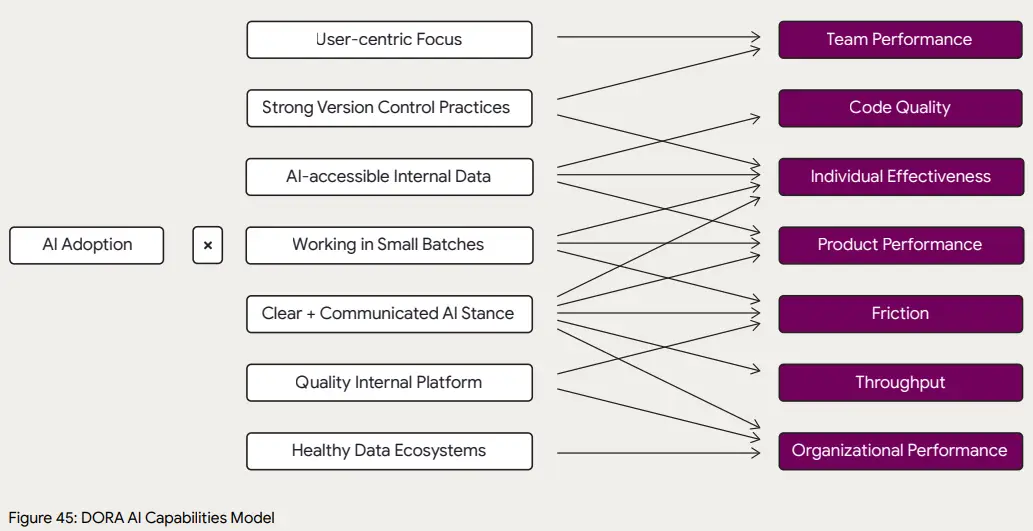

The report’s final sections introduce the “AI Capabilities Model”, which directly addresses this question. The model positions AI not merely as a technical tool but as an organizational maturity test. In other words, AI’s success depends not on the AI tool itself but on the maturity of the system in which it operates. DORA’s findings frame AI as a catalyst for internal transformation, emphasizing that the right organizational foundations are key to unlocking its full potential.

Purpose and Scope of the Model

The DORA AI Capabilities Model defines the core capabilities organizations need to derive scalable, sustainable, and secure value from AI. The model positions AI as an amplifier: on weak infrastructure it magnifies flaws, while on strong systems its power becomes transformational momentum.

The report identifies seven core organizational capabilities as critical determinants of AI success. Each capability plays a crucial role in balancing speed and stability and enabling high-performing team outcomes.

Clear and Communicated AI Stance

This capability ensures that teams understand how and under what conditions AI should be used.

A clear AI stance eliminates uncertainty and provides rapid answers to critical questions such as “Which AI tools can I use?”, “Which data, especially sensitive internal data, can be shared with these tools?”, and “What are the security and privacy expectations?”

DORA emphasizes that a clear AI stance also mitigates the “trust paradox”, allowing teams to act confidently without hesitation.

Healthy Data Ecosystems

AI models are only as strong as the data that feeds them. The report highlights that high-quality, unified, and accessible data ecosystems are foundational for AI to enhance organizational performance. Data silos or disorganized sources limit AI’s effectiveness, while well-structured and efficient data ecosystems maximize AI’s amplifier effect.

AI-Accessible Internal Data

Beyond healthy data ecosystems, AI must have access to internal systems—including API documentation, codebases, and knowledge repositories like Confluence or Jira. Internal context allows AI outputs to be more accurate, relevant, and strategic, enhancing decision-making and operational efficiency.

Strong Version Control Practices

As AI accelerates productivity, managing outputs becomes equally critical. Version control ensures that AI-generated code is traceable, reversible, and reviewable, providing a safety net to reduce instability. Strong practices align team coordination with AI-driven output and mitigate technical debt risks.

Working in Small Batches

AI can produce hundreds of lines of code at once. DORA recommends smaller, manageable batches to isolate errors and reduce Change Fail Rate. Working in small increments ensures speed without sacrificing stability and allows teams to review and test outputs efficiently.

User-Centric Focus

Teams must focus on delivering value to users, not just producing code faster. Aligning AI outputs with user needs transforms productivity gains into strategic outcomes. User-centricity acts as a compass for AI-driven teams, ensuring speed translates into meaningful results.

Quality Internal Platforms

Robust internal platforms, developed via Platform Engineering, provide the “highway” that sustains AI-driven speed. Standardized, automated, and secure tools across testing, deployment, and infrastructure allow teams to fully leverage AI’s advantages while maintaining stability.

Conclusion: The System that Feeds the Amplifier

These seven capabilities form the organizational system that powers AI and maximizes its value. DORA clearly states that organizations lacking these capabilities cannot fully benefit from AI, and may even see existing problems—like instability or chaos—amplified. In essence, AI amplifies the strength of mature systems and exposes the weaknesses of immature ones.

How to Rapidly Integrate the 7 DORA Capabilities into Your Team

The 2025 DORA Report clearly defines the organizational capabilities needed to extract real value from AI. These capabilities are not just individual productivity tools but strategic pillars that strengthen the entire organizational system. Below is a practical guide for applying each capability, with a focus on Turkish tech teams.

Clear and Communicated AI Stance

Uncertainty in strategy directly impacts decision-making. In AI contexts, ambiguity arises around tool usage, data sharing, and security/privacy boundaries. Fast-growing Turkish e-commerce and fintech teams operate under high-speed delivery pressure, which magnifies these risks.

DORA emphasizes that a clear AI stance provides psychological safety, supporting the “trust but verify” approach. In practice, this means clearly communicating which AI tools are approved, which are restricted, and rules for sharing sensitive internal data. Establishing responsibility lines ensures teams mitigate operational errors and maintain code stability.
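
One way to make such a stance concrete is to express it as data that tooling can check. The sketch below is only an illustration: the tool names, the three data classes, and the `is_allowed` helper are hypothetical and not part of the DORA report.

```python
from enum import Enum


class DataClass(Enum):
    """Hypothetical data sensitivity levels, from least to most sensitive."""
    PUBLIC = 1
    INTERNAL = 2
    SENSITIVE = 3


# Hypothetical policy: tool name -> most sensitive data class it may receive.
# Tools that are not listed are treated as not approved.
APPROVED_TOOLS: dict[str, DataClass] = {
    "internal-code-assistant": DataClass.SENSITIVE,
    "public-chatbot": DataClass.PUBLIC,
}


def is_allowed(tool: str, data: DataClass) -> bool:
    """True only if the tool is approved and cleared for this data class."""
    ceiling = APPROVED_TOOLS.get(tool)
    return ceiling is not None and data.value <= ceiling.value


print(is_allowed("public-chatbot", DataClass.INTERNAL))
print(is_allowed("internal-code-assistant", DataClass.SENSITIVE))
```

Keeping the policy in one small, reviewable structure means the answer to “Can I paste this into that tool?” is the same for every team member.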

A clear stance also positions AI as a strategic enabler, not just a productivity tool. Teams can produce faster and maintain security and quality standards, which is critical in highly competitive sectors.

Healthy Data Ecosystems

AI effectiveness depends on the quality and integration of underlying data. A healthy ecosystem involves clean, validated, and interconnected data, breaking silos and standardizing management processes.

In Turkey, many tech teams are still maturing in data integration and documentation, which limits AI output reliability. A healthy data ecosystem ensures AI suggestions are accurate, actionable, and aligned across departments. Standardizing formats and documenting processes also reduces error rates and stabilizes operations.

AI-Accessible Internal Data

Once a healthy data ecosystem exists, AI must access internal corporate data. Without it, outputs remain generic and context-free.

DORA highlights that internal data access dramatically improves output relevance and accuracy, speeding processes and delivering measurable business value. For example, in e-commerce, enabling AI to access product catalogs and stock information ensures recommendations or automated updates are correct and actionable.

Strong Version Control Practices

Accelerated AI output without robust version control increases instability and technical debt. Many Turkish teams rely on basic version control and manual processes, risking untracked changes, conflicting commits, and higher error rates.

DORA stresses that strong version control guides AI’s amplifier effect positively. Traceable and standardized commits, e.g., tagging AI-generated code with prefixes like “AI-Gen” or “AI-Refactor,” facilitate rollback, review, and coordination. Optimized branching and PR strategies allow teams to gain speed while maintaining quality.
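
To show how such prefixes could be used in practice, here is a minimal Python sketch that classifies commit subject lines. It assumes the convention that the prefix appears at the very start of the subject; the sample subjects are invented for illustration.

```python
import re
from collections import Counter
from typing import Optional

# Assumed convention: the commit subject starts with "AI-Gen" or "AI-Refactor".
AI_PREFIX = re.compile(r"^(AI-Gen|AI-Refactor)\b")


def ai_tag(subject: str) -> Optional[str]:
    """Return the AI prefix of a commit subject, or None for untagged commits."""
    match = AI_PREFIX.match(subject.strip())
    return match.group(1) if match else None


def summarize(subjects: list[str]) -> Counter:
    """Count commits per tag; commits without a prefix count as 'untagged'."""
    return Counter(ai_tag(s) or "untagged" for s in subjects)


log = [
    "AI-Gen: add retry logic to payment client",
    "Fix typo in README",
    "AI-Refactor: split checkout handler",
    "AI-Gen: generate unit tests for cart service",
]
print(summarize(log))
```

The subject lines could be fed in from `git log --format=%s`, which gives reviewers a quick view of how much of a release was AI-assisted and where rollbacks are most likely to be needed.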

Working in Small Batches

AI often produces large code blocks, which can complicate error detection. DORA recommends breaking outputs into small, testable modules.

In practice, Turkish speed-driven teams often produce large feature PRs. Splitting AI outputs into smaller units makes review, testing, and deployment safer, reducing Change Fail Rate and Rework Rate. For example, prompting AI to divide a 500-line block into three independent functions allows controlled productivity without destabilizing the system.
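
A simple guardrail for this is a size check on pull requests. The following sketch flags PRs above a line-count limit; the 200-line threshold and the `PullRequest` shape are assumptions chosen for the example, not figures from the DORA report.

```python
from dataclasses import dataclass

MAX_CHANGED_LINES = 200  # assumed team threshold, not a DORA figure


@dataclass(frozen=True)
class PullRequest:
    """Hypothetical summary of a pull request."""
    title: str
    lines_added: int
    lines_deleted: int

    @property
    def size(self) -> int:
        """Total changed lines, counting both additions and deletions."""
        return self.lines_added + self.lines_deleted


def oversized(prs: list[PullRequest], limit: int = MAX_CHANGED_LINES) -> list[PullRequest]:
    """Return the pull requests whose changed-line count exceeds the limit."""
    return [pr for pr in prs if pr.size > limit]


open_prs = [
    PullRequest("Add coupon validation", lines_added=80, lines_deleted=10),
    PullRequest("AI-Gen: new checkout flow", lines_added=480, lines_deleted=20),
]
for pr in oversized(open_prs):
    print(f"Consider splitting: {pr.title} ({pr.size} changed lines)")
```

A check like this works best as a prompt for conversation rather than a hard block, since some large changes (generated files, renames) are legitimately big.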

User-Centric Focus

Speed alone does not guarantee success; outputs must deliver real user value. Aligning AI with user stories ensures outputs support business and UX goals, not just technical correctness.

For instance, in fintech, prompting AI with the user story behind a secure password reset workflow helps the generated code meet both functional and user expectations. Adding user stories to AI prompts steers the output toward meaningful, value-driven results.
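
As a small illustration of putting the user story in front of the task, the sketch below assembles a prompt from a story, its acceptance criteria, and the coding task. The `build_prompt` helper, the prompt layout, and the sample story are hypothetical; adapt them to whatever assistant and template your team uses.

```python
def build_prompt(task: str, user_story: str, acceptance_criteria: list[str]) -> str:
    """Combine the user story and its acceptance criteria with the coding task."""
    criteria = "\n".join(f"- {item}" for item in acceptance_criteria)
    return (
        f"User story: {user_story}\n"
        f"Acceptance criteria:\n{criteria}\n"
        f"Task: {task}"
    )


prompt = build_prompt(
    task="Implement the password reset endpoint.",
    user_story=(
        "As a customer who forgot my password, I want to reset it securely "
        "so that I can regain access to my account."
    ),
    acceptance_criteria=[
        "Reset links expire after a short time.",
        "The response does not reveal whether an email address is registered.",
    ],
)
print(prompt)
```

Keeping the acceptance criteria in the prompt also gives reviewers a checklist to verify the generated code against.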

Quality Internal Platforms

Sustaining AI-driven speed requires robust, automated internal platforms. These standardize testing, deployment, and security processes.

In Turkey, many teams lack such standardized platforms; AI outputs may exceed system capacity. DORA notes that high-quality platforms convert AI’s speed into sustainable stability. Optimizing CI/CD pipelines, test processes, and security controls ensures teams detect and fix errors rapidly, while providing a “Golden Path” for new team members.

Practical implementation includes documenting standards and teaching AI the internal processes, enabling teams to develop quickly, safely, and autonomously.

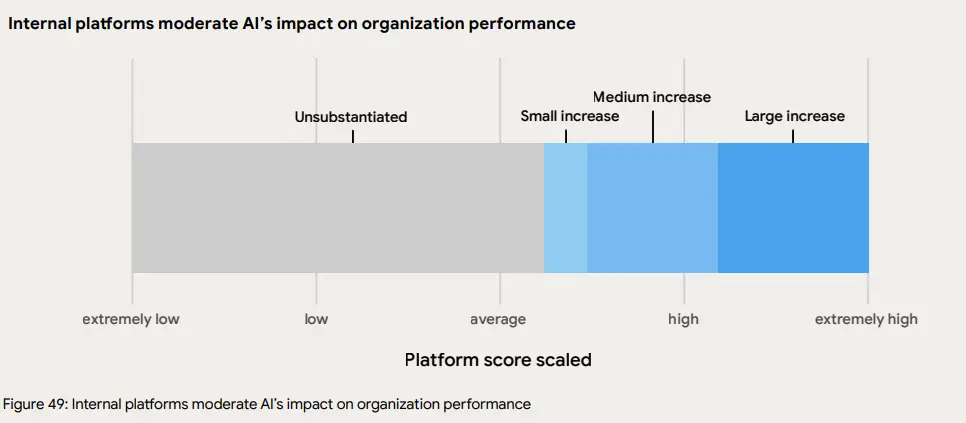

Maximizing the Impact of AI Investments Through Platform Engineering and Value Stream Management

The role of AI in software development requires managing a complex balance between speed and stability. AI can significantly boost team productivity, but this speed must be managed safely and sustainably—only achievable through investments in technical infrastructure and strategic processes. Platform engineering and value stream management (VSM) emerge as two critical capabilities that directly shape the value derived from AI.

Technical Foundation: Platform Engineering

Platform engineering standardizes and automates software development processes, including testing, security, deployment, and infrastructure. It provides developers with a self-service environment that meets their operational needs. Today, platform engineering is no longer a luxury for large enterprises but a fundamental necessity for all technology organizations.

Its importance is directly linked to AI’s amplifier effect. AI can increase code production volume by up to five times in a short period; however, without sufficient infrastructure and processes, this speed can lead to instability. Platform engineering ensures safe management of AI-driven acceleration, enabling automated testing, security checks, builds, and seamless deployment to production.

Platform engineering is not just a technical requirement—it is a force multiplier for organizational performance. A high-quality internal platform amplifies AI’s corporate impact, while a weak platform limits effectiveness and increases instability risk. Leaders must ensure that investments in AI tools are matched by robust platform engineering infrastructure capable of handling the increased workload.

Strategic Compass: Value Stream Management (VSM)

VSM visualizes and optimizes all steps from an idea or user request to delivered customer value. It serves as a strategic compass to direct AI-generated speed efficiently and effectively.

While AI boosts productivity, applying this speed in the wrong areas can increase inefficiencies. VSM identifies bottlenecks, delays, and unnecessary steps, transforming AI’s acceleration into real business value. Organizations with strong VSM practices can realize medium to high returns from AI investments, whereas organizations lacking VSM may see minimal impact. Leaders should therefore strengthen VSM in parallel with AI initiatives to maximize outcomes.
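
To make the idea of finding bottlenecks concrete, here is a minimal Python sketch that takes per-stage timings for one work item, reports the slowest stage, and computes flow efficiency (active time divided by total elapsed time). The stage names and hour values are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One step in a value stream (hypothetical measurements in hours)."""
    name: str
    active_hours: float   # time someone is actually working on the item
    waiting_hours: float  # time the item sits in a queue

    @property
    def elapsed_hours(self) -> float:
        return self.active_hours + self.waiting_hours


def bottleneck(stages: list[Stage]) -> Stage:
    """Return the stage with the longest total elapsed time."""
    return max(stages, key=lambda s: s.elapsed_hours)


def flow_efficiency(stages: list[Stage]) -> float:
    """Active time as a share of total elapsed time across all stages."""
    total = sum(s.elapsed_hours for s in stages)
    active = sum(s.active_hours for s in stages)
    return active / total if total else 0.0


stream = [
    Stage("coding", active_hours=4.0, waiting_hours=1.0),
    Stage("review", active_hours=1.0, waiting_hours=19.0),
    Stage("testing", active_hours=3.0, waiting_hours=5.0),
    Stage("deployment", active_hours=1.0, waiting_hours=2.0),
]
print(f"Bottleneck: {bottleneck(stream).name}")
print(f"Flow efficiency: {flow_efficiency(stream):.0%}")
```

In this made-up stream, speeding up coding with AI would barely change delivery time, because most of the elapsed time is spent waiting for review.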

Strategic Takeaways for Leaders

Platform engineering and VSM are two essential levers for ensuring AI investments deliver sustainable value:

- Platform engineering ensures that high-speed code production is secure and stable.

- Value stream management ensures that speed is applied effectively, translating into measurable organizational performance.

Organizations must invest not only in AI licenses but also in technical and process infrastructure capable of supporting the increased workload AI generates. Integrating these capabilities transforms AI from a mere acceleration tool into a strategic instrument for sustainable, measurable business value.

From “Using AI” to Strategically “Managing AI”

AI has moved beyond being a mere auxiliary tool in software development. Initially, teams used AI primarily to increase speed and productivity, leveraging it for code completion, test scenario generation, documentation, and other routine tasks. However, analysis and experience indicate that real value from AI emerges not just from usage, but from strategic management. Organizations must transition from being tool users to becoming system designers who integrate AI holistically and systematically.

Guiding the Amplifier Effect of AI

AI acts as an amplifier for existing organizational processes and capabilities. Strong infrastructure, healthy data ecosystems, and clear workflows allow AI to enhance these strengths. Conversely, in weak systems AI magnifies existing vulnerabilities, deepening instability. Leaders must shift focus from AI as a “faster coding tool” to strengthening supporting systems, creating a strategic advantage that ensures both productivity and long-term sustainability.

Creating Sustainable Value Through Strategic Management

Managing AI requires not only defining usage guidelines but also optimizing organizational capabilities holistically, including data quality, platform engineering, version control, and value stream management. This approach balances speed and stability, allowing teams to produce rapidly without compromising quality or security.

Moreover, user-centric focus and systematic testing ensure that AI-generated outputs translate into tangible business value, driving measurable performance improvements across the organization.

Transitioning from Tool User to System Designer

Using AI alone limits organizations to short-term gains. A strategic management approach transforms AI into an organizational advantage: teams can balance speed with quality, minimize technical debt, and build sustainable innovation capacity. Leaders and teams should view AI not merely as a task acceleration tool but as a strategic instrument that enhances system performance and aligns with organizational objectives.

This perspective represents both a technological and cultural shift. Organizations that design and manage AI strategically can achieve competitive advantage and long-term value creation.
